Maybe others are in the same boat. Until ChatGPT took the world by storm, I didn't have specific plans to write about AI at a granular level. Now my students and in-laws are asking about it. I have to write essays explaining how AI affects my work. The cases are cropping up. For others seeking to write about AI, and especially generative AI, I am sharing links to some articles I found helpful for understanding basic issues, as well as excerpts from an interview I did with Professor Charlotte Tschider, where I asked her questions about AI that she patiently answered.

Written Description

Patent & IP blog, discussing recent news & scholarship on patents, IP theory & innovation.

Tuesday, January 23, 2024

Wednesday, December 20, 2023

Guest post by Gugliuzza, Goodman & Rebouché: Inequality and Intersectionality at the Federal Circuit

Guest post: Paul Gugliuzza is a Professor of Law at Temple University Beasley School of Law, Jordana R. Goodman is an Assistant Professor of Law at Chicago-Kent College of Law and an innovator in residence at the Massachusetts Institute of Technology, and Rachel Rebouché is the Dean and the Peter J. Liacouras Professor of Law at Temple University Beasley School of Law.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

The ongoing reckonings with systemic racism and sexism in the United States might seem, on first glance, to have little to do with patent law. Yet scholarship on racial and gender inequality in the patent system is growing. Recent research has, for example, shown that women and people of color are underrepresented among patent-seeking inventors and among lawyers and agents at the PTO. In addition, scholars have explored racist and sexist norms baked into the content of patent law itself.

In a new article, we empirically examine racial and gender inequality in what is perhaps the highest-stakes area of patent law practice: appellate oral argument at the Federal Circuit.

Unlike many prior studies of inequality in the patent system, which look at race or gender in isolation, our article looks at race and gender in combination. The intersectional approach we deploy leads to several new insights that, we think, highlight the importance of getting beyond “single-axis categorizations of identity”—a point Kimberlé Crenshaw made when introducing the concept of intersectionality three decades ago.

The dataset we hand built and hand coded for our study includes information about the race and gender of over 2,500 attorneys who presented oral argument in a Federal Circuit patent case from 2010 through 2019—roughly 6,000 arguments in total. Our dataset is unique not only because it contains information about both race and gender but also because it includes information about case outcomes, which allows us to assess whether certain cohorts of attorneys win or lose more frequently at the Federal Circuit.

Perhaps unsurprisingly, we find that the bar arguing patent appeals at the Federal Circuit is overwhelmingly white, male, and white + male, as indicated in the figures below, which break down, in a variety of ways, the gender and race of the lawyers who argued Federal Circuit patent cases during the decade covered by our study. (Note that the figures report the total number of arguments delivered by lawyers in each demographic category. Note also that the number of arguments we were able to code for the race of the arguing lawyer was slightly smaller than the number of arguments we were able to code for the gender of the arguing lawyer, so the total number of arguments reported on the figures differs slightly.)

What is surprising, however, is that the racial and gender disparities illustrated above dwindle when we look only at arguments by lawyers appearing on behalf of the government, as shown in the figures below, which limit our data only to arguments by government lawyers. (About 75% of those government arguments were by lawyers from the PTO Solicitor’s Office; the others came from a variety of agencies, including the ITC and various components of the DOJ.)

Guest post by Heath, Seegert & Yang: Open-Source Innovation and Team Diversity

Guest post by Davidson Heath, Assistant Professor of Finance, Nathan Seegert, Associate Professor of Finance, and Jeffrey Yang. All authors are with the University of Utah David Eccles School of Business.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

Diversity in innovation is essential. Varied perspectives, experiences, and skills foster creativity and problem-solving. Diverse teams are more likely to challenge assumptions, leading to novel solutions and breakthroughs. Variety in tastes and background can help identify and serve a wide range of user needs.

Open-source software (OSS) is often praised for its ability to foster innovation. Part of the rationale is that OSS allows for open collaboration, enabling continuous improvement and adaptation by a diverse community. For example, a vast garden of open-source large language models, such as Meta’s Llama 2, is flourishing, and these models are projected to surpass closed-source AI in the near future.

Figure 1. Capabilities of Machine Learning Models: Open vs. Closed-Source

The open-source collaborative model has accelerated innovation in many fields. Yet to date, we know little about how these teams form, and how their diversity impacts productivity. How does the diversity of OSS teams compare to the overall contributor pool? And what are the productivity outcomes for OSS teams that increase their diversity compared to those that do not?

Wednesday, September 27, 2023

Guest Post by Paola Cecchi Dimeglio: An Invitation to Inclusive Innovation

Guest post by Dr. Paola Cecchi Dimeglio, Chair of the Executive Leadership Research Initiative for Women and Minorities Attorneys at Harvard Law School and Harvard Kennedy School

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

Virtual reality, AI chatbots, and other emerging technologies are fueling a drive to innovate, improve, and patent new products and services that are inclusive from the beginning. This goal is not only morally right but also economically essential; inclusive innovation has become a multibillion-dollar necessity. However, engaging diverse inventors at large technology companies still presents layers of challenges.

In 2022, the USPTO reported a 32% growth in the number of U.S. counties where women patented over the 30-year span from 1990 to 2019; in 2019, over 20% of patents issued included at least one woman inventor; similar data is not available for minority inventors.

Perhaps more than at any time in their history, technology companies are under pressure to achieve patentable breakthroughs. One factor driving the urgency of innovation is the need to create and commercialize products and services that meet the promises of emerging technologies. Once the stuff of sci-fi and fantasy, the Metaverse and humanlike generative AI have taken substantial steps out of movies and literature. Virtual and augmented reality has gone mainstream, and the premier generative AI chatbot, ChatGPT, set user records shortly after it was released late in 2022. Having sampled the Metaverse and prompted chatbots to pour out pages, the public wants more, and they want it now.

Technology giants often partner with smaller, specialized businesses for the purpose of achieving technological breakthroughs. These collaborative ventures may produce new platforms, result in extensive IP development, and spawn multiple families of products. Most often, they fail.

In the current race to innovate, businesses are looking within their ranks for beneficial patentable ideas. It makes sense, as employees at all levels of a company have a close relationship with that organization’s products, patents, and aspirations. Leaders realize that the next big invention can emerge from unexpected quarters at their businesses, and many have begun casting the net as wide as possible.

This time around is different. Innovation has to be highly inclusive at the outset. The environments, cultures, policies, and dynamics of virtual worlds have to operate without traditional biases. And AI has to think and decide without the incidents of discrimination that are dragging many businesses into court. It’s about the bottom line. A Metaverse that is not tuned to highly diverse users cannot achieve its full value potential, estimated at $936.6 billion by 2030. To realize these earnings, inclusion has to be a real part of the innovation process.

Guest Post by Jordana Goodman: Unseen Contributors: Rethinking Attribution in Legal Practices for Equity and Inclusion

Guest Post by Jordana R. Goodman, Assistant Professor at Chicago-Kent College of Law

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

According to the National Association for Law Placement, female equity partners in law firms comprised about 23% of the total equity partner population in the United States in 2022. Women made up more than half of all summer associates and have done so since 2018. Representation among intellectual property lawyers parallels this trend, with women representing about 22% of all equity partners and over 50% of all summer associates in 2019. Although there has been steady progress in hiring women attorneys at junior levels, there have not been similar increases in partner retention in the past thirty years. NALP called the partner level increases “abysmal progress,” and suggested that one reason for this failure is that “little work has been done to examine and change the exclusionary practices that create inequalities.”

If presence was the only obstacle to creating a more diverse, equitable, and inclusive legal environment, the pipeline of diverse junior associates would have begun to significantly shift the partner demographics at law firms across the country. However, because the environment within a law firm can be unfriendly to non-Caucasian, non-cisgender male, non-heterosexual lawyers, people who identify as such tend to leave legal practice at higher rates. More must be done to remedy inequities within the day-to-day practices to create an equitable legal environment.

As detailed in my study, Ms. Attribution: How Authorship Credit Contributes to the Gender Gap, allocation of credit on public-facing legal documents is not equitable. When the senior-most legal team member signs documents on behalf of their legal team, they are erasing the names of associates from the record. This widespread practice, combined with the constant perceived differences in status between male and female colleagues as well as biases related to accents, can lead to negative consequences and unequal attribution for women, people of color, and LGBTQ+ individuals. “Under-attribution of female practitioners falsely implies that women do less work, are more junior, and do not deserve as much credit as their male colleagues” and such practices must change.

Thursday, August 24, 2023

Guest Post by Suzanne Harrison: Diversity Pledge: Boosting Innovation and Competitiveness

Guest post by Suzanne Harrison, Chair of the Patent Public Advisory Committee (PPAC) at the USPTO.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

When we created the Diversity Pledge, our hope was to create more transparency in innovation and inventorship inclusivity by creating a standard metric for companies to include in their DEI reports. What we have come to realize, however, is that increasing diversity and inclusivity in innovation is not only an equal-opportunity social imperative; it is a common-sense means to improve R&D efficiency and corporate ROI, and it is also a necessity for maintaining and increasing national competitiveness. Because we can’t afford to leave our most talented people on the sidelines, the goal must be actionable, not performative.

Guest Post by Professors Aneja, Subramani, and Reshef: Why Do Women Face Challenges in the Patent Process?

Guest post by Abhay Aneja, Assistant Professor of Law, University of California, Berkeley, Diversity Pilots Initiative Researcher, Gauri Subramani, Assistant Professor in the Department of Management, College of Business, Lehigh University and Diversity Pilots Initiative Researcher, and Oren Reshef, Assistant Professor of Strategy, Washington University in St. Louis

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

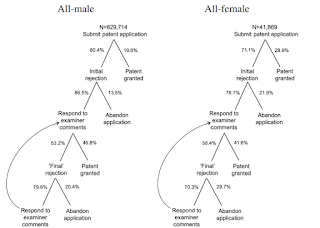

About 86% of all patent applications are submitted by men or all-male teams. This underrepresentation of women gets worse as the patent approval process runs its course. In other words, patent applications from women and teams with higher female representation are less likely to convert into granted patents. Why is this happening?

An essential feature of the patent process is that it is highly iterative, so rejection occurs often, as the figure below of the evaluative trajectory of patent applications shows. While over 80% of applications face rejection, it is crucial to note that rejection does not necessarily indicate the impossibility of moving forward with that invention. Applicants can respond to rejections and continue in the patent process. However, research indicates that female patent applicants are less likely to follow up after rejection, contributing significantly to the lower conversion rate of applications to granted patents.

Wednesday, July 12, 2023

Guest Post by Prof. Koffi: A Gender Gap in Commercializing Scientific Discoveries

Guest post by Marlene Koffi, Assistant Professor of Economics, University of Toronto and NBER Faculty Research Fellow. This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here and resources from the first conference of the initiative are available here.

Diversity and inclusion in science and commercialization are integral to innovation, societal and economic growth. While progress has been made in increasing representation and inclusivity in STEM, there are complex factors at play that hinder a comprehensive understanding of the barriers faced by underrepresented groups in these fields. Today, I will focus on a challenging point later in the invention process: commercializing a scientific discovery. In a research study with Matt Marx, we characterize the gender dynamics of scientific commercialization in the full canon of scientific inquiry.

One of the highlights of our study is to show that, as a society, we have made lots of progress regarding gender balance in the early steps of the scientific production process. Analyzing 70 million scientific articles, we observe meaningful growth in female participation in scientific production. In 1980, barely one in five published papers included a female author. By 2020, that figure exceeded 50%. This increase represents a significant cultural shift in the scientific community. Diversity in science has been shown to stimulate innovation and promote higher recognition within the academic community. This is a win not just for the women involved but for the whole of society.

However, these gains for women early in scientific production hide potential pitfalls later. Namely, the key takeaway of our study is that a significant gender gap remains for commercializing scientific discoveries. Given that we find the gender gap in commercialization is the largest among discoveries that are more highly cited and with higher commercial potential, we title our study and refer to these uncommercialized discoveries as “Cassatts in the Attic” after the renowned female painter and printmaker Mary Cassatt.

Wednesday, June 28, 2023

Guest Post: Closing the Gender Innovation Gap with Guided Inventor Sessions

By: Kevin Ahlstrom, Associate General Counsel, Patents, Meta

(This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.)

Guided invention sessions not only increase idea submission rates but also transform individuals' perception of themselves as inventors. By creating a supportive environment and equipping participants with the necessary tools, these sessions pave the way for gender equality in patenting.

Women submit ideas for patenting at a lower rate than men

In 2021, I noticed that most of the ideas I received for patenting came from men. At Meta, employees are encouraged to submit patent ideas through an inventor portal. Women submitted less than 10% of the ideas I received, despite making up more than 30% of the technical and design roles in the organizations I supported. I was chatting with a research scientist about this, and I asked her why she didn’t submit more of her ideas for patenting. She said, “I tend to minimize my contributions compared to others on my team. I sometimes think that the big patentable ideas are for people above my paygrade.”

Another female UI designer said, “We are all often working on things with many other people, and so it can feel presumptuous to claim ‘ownership’ over an idea. Vying for credit can bring up yucky shame feelings in me when I have been trained by our culture to make people happy, to support others, to help.”

I realized there were stark differences between the way that I, a male patent attorney, and many of my female coworkers view the invention process and related work. There are likely many causes for this engagement gap:

- differences in social expectations between men and women;

- fewer historical female inventor role models;

- women may be implicitly penalized for claiming ownership and credit;

- women often take on the unpaid labor of home and childcare responsibilities, leaving less time or energy for patent activities.

Regardless of the cause, it was clear that I could not rely solely on our inventor portal to capture women-generated innovation.

As my team and I searched for solutions, I initially wanted to hold training sessions for women on how to submit and advocate for their ideas. That’s what the men did – they submitted frequently and argued with me frequently; consequently, I approved more of their ideas for patenting. But why should we train women to act more like men? It didn’t make sense to ask women to change their behavior to fit inside a system that wasn’t designed for them. Instead of more training, we needed a change in our system to meet innovators where they were.

The Patent Team at Meta has been working on this issue for years. Together, we have made large strides in creating a patent program that is equitable and accessible to everyone. We’ve surveyed employees to better understand their needs, we’ve revamped our inventor portal to be more inclusive, we’ve held conferences and forums to spotlight diverse inventors and encourage other companies to improve, and much more.

Wednesday, June 14, 2023

Guest Post: How We Can Bridge the Innovation Gap

By: W. Keith Robinson, Professor of Law, Faculty Director for Intellectual Property, Technology, Business, and Innovation, Wake Forest University School of Law. Watch his video proposing a Law and Technology Pipeline Consortium.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

The patent system is a foundational part of the United States’ innovation ecosystem. The country created a national patent system in 1790. While the patent system has evolved over 200 years, it has remained stagnant in one glaring way. The number of inventors and patent professionals that are women or belong to underrepresented racial and ethnic groups is alarmingly low as compared to white men. While this disparity raises concerns about inclusivity, it also raises the possibility that there are untapped reservoirs of creativity and innovation within our borders.

For example, a 2016 study by the Information Technology and Innovation Foundation revealed that 3.3% of U.S.-born innovators identified as Hispanic, and 0.4% of U.S.-born innovators identified as black or African American. The same study found that women represent just 12% of U.S.-born innovators. These numbers might seem staggering to some. Others might genuinely ask why these numbers should raise concerns.

One need look no further than the changing demographics of the U.S. Census data from 2020 indicate that the share of the U.S. population that identifies as White has declined for several decades. The U.S. is becoming more diverse, and it seems this trend will continue. In Peter F. Drucker’s book, Innovation and Entrepreneurship, Drucker argues that demographics are the clearest external source of innovative opportunity. Underrepresented innovators tend to address overlooked problems that are inherent to their communities. The country’s changing demographics could provide a wealth of untapped innovative opportunities.

The question, then, is what causes the demographic disparity in the patent system, and how can we address it?

Wednesday, May 31, 2023

Guest Post: Charting New Paths in Innovation: Reflections from Harvard’s Innovation Economics Conference

By: Jillian Grennan, Associate Professor of Finance and Principal, Diversity Pilots Initiative

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

Recently, I had the privilege of being part of the Junior Innovation Economics Conference at Harvard Business School. This diverse gathering of scholars from fields as varied as management, technology, economics, finance, and public policy delved headlong into the intricate dynamics of invention and innovation policy. Several researchers spoke about issues relevant for better understanding diversity and inclusion in the inventive process and how to improve it. These included: documenting gender disparities in attribution for innovative output, understanding how “opt-in” organizational processes can unlock the innovative potential of engineers from underrepresented groups, and measuring how broader representation can help bring more valuable innovations to market.

Friday, May 26, 2023

Guest Post: Bridging the Gap: IP Education for All with SLW Academy

By: Piers Blewett, Principal at Schwegman Lundberg & Woessner (SLW)

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

Hello! I'm Piers Blewett, a principal at Schwegman Lundberg & Woessner (SLW), and a patent attorney who started in a place once known as Rhodesia, now Zimbabwe. My personal journey exposed me to the nuances of systemic change and the gap that can often exist when it comes to universal access to opportunities.

During the transitional period in Zimbabwe and later South Africa, I witnessed firsthand that systemic change does not always include broad availability of opportunities. Elements like knowledge transfer and mentorship can often seem out of reach, particularly for those at the beginning of these transitions.

This personal perspective was tragically echoed nearly three years ago. On May 25th, 2020, the world witnessed the heartbreaking tragedy of George Floyd’s murder at the intersection of 38th and Chicago Ave in Minneapolis, a location not far from our offices. The events etched George Floyd’s name into our collective memory, catalyzing a global outcry against systemic racism and underscoring the persistent racial disparities afflicting our communities.

This tragedy led my team and me to ponder deeply on the systemic disparities that exist in our own professional sphere in Intellectual Property (IP), and to listen carefully to those impacted by the effects of injustice. I recalled what one of my mentors taught me years ago: "if you endow people with skills and mentors, they will succeed." With this background, we decided to act, and the SLW Academy was born.

Wednesday, May 24, 2023

All Together Now: Highlights from the First Innovator Diversity Pilots Conference

Guest Post by Margo A. Bagley, Asa Griggs Candler Professor of Law, Emory University School of Law, co-inventor, and Principal, Diversity Pilots Initiative. Watch her video for Invent Together, entitled Challenges Encountered as a Diverse Inventor.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

In addition to being Associate Dean for Research and Asa Griggs Candler Professor of Law at Emory University School of Law, I am an African-American woman, co-inventor on two patents, patent attorney and law professor, author of numerous articles, chapters, and books on patent law, and advisor on patent issues to governments and international organizations. And yet, it is my firsthand experience, as a member of groups that have been systematically underrepresented and overlooked in the innovation ecosystem, that gives me a deep understanding and resolve to champion diversity and inclusion in innovation and led me to co-organize, with Professor Colleen Chien and personnel from the USPTO, the first Innovator Diversity Pilots conference held at Santa Clara Law School on November 18, 2022. (Video recordings and slides are available here.) This blog post, and others to follow in the series, will highlight practices that have been or will be tried, tested and evaluated to increase diversity in innovation.

Tuesday, April 25, 2023

Are NDAs unenforceable when they protect more than trade secrets?

Are NDAs unenforceable when they protect more than trade secrets? The standard answer is no. NDAs can prevent disclosure of contractually-defined "confidential" information that is shared in the course of a confidential relationship, even if it is not technically a trade secret. NDAs can, in other words, go beyond trade secrecy.

NDAs have also not traditionally been treated as contracts in restraint of trade, like noncompetes are. An NDA's purpose is, ostensibly, just to protect secrets. Similar to trade secret law, NDAs only prevent an employee from disclosing (and using outside authorization) specifically-defined information. They don't prohibit competition per se. NDAs are thus seen as comparatively "narrow restraints" which, all else being equal, should be preferred to noncompetes.

Or at least that is the common wisdom. Although there is some support for this viewpoint in treatises and judicial dicta, our new article, Beyond Trade Secrecy: Confidentiality Agreements That Act Like Noncompetes, shows that a growing contingent of courts across jurisdictions are finding NDAs in employment agreements to be unenforceable when they reach too far beyond trade secrecy. Even Google's NDA was recently found unenforceable by a California court, because it did not make sure employees could use or share skills they learned at Google with prospective employers. (That said, the Google opinion is quite extreme, even compared to others we reviewed. See pp. 8-11 of the opinion, Doe v. Google, Inc., Case No. CGC-16-556034 (Cal. Super. Ct., Cty. of San Francisco, Jan. 13, 2022)).

The article is available on SSRN and is forthcoming in the Yale Law Journal. It is co-authored by me and Chris Seaman. This blog post is cross-posted on Patently-O.

Monday, April 24, 2023

Too Much of a Good Thing: Jake Linford on Copyright & Attention Scarcity

In his fascinating 2020 article in Cardozo Law Review, entitled Copyright and Attention Scarcity, Jake Linford provided a new justification for copyright law's barriers against derivative content: saving the overtaxed attention spans of copyright's beleaguered audience. If readers and viewers got as many unauthorized derivative works as they wanted, Linford suggested, they would be unable to find the time and energy to read, watch, and sort through all of the derivatives available to them. By giving original authors the right to control derivative works, copyright law protects the audience from content overload.

I loved the article and really appreciated Linford's creative use of the literature on "attention scarcity." That said, as a viewer and reader, I am not sure I like where the thesis leaves me. Speaking for myself, when I am tired and overloaded, the last thing I want is more originals. I want to return to my old favorites through a new lens; I want a sequel, a prequel, or a re-make. Whether these derivatives are authorized or un-authorized matters less to me than whether they are familiar and easy to get into without a lot of legwork. (I do want to know whether the content is made by or authorized by the original creator. But trademark law protects consumers from being misled as to source. Thanks to trademark law, I would know when the newest Star Wars is authorized by Disney and when it's not.)

I am about three years behind with this post. My excuse, besides the pandemic, is that I felt it necessary to watch all seasons of Cobra Kai, along with the films in the original Karate Kid franchise, plus the entire library of Disney Plus, to fully research a response.